This SlideShare introduction is quite interesting. It explains how the K-Means algorithm works.

(Credit to Subhas Kumar Ghosh)

One common problem with Hadoop is an unexplained hang when running a sample job. For instance, I’ve been testing Mahout (cluster-reuters) on a multinode Hadoop cluster (1 namenode, 2 slaves). In my case, the trace looked like this:

15/10/17 12:09:06 INFO YarnClientImpl: Submitted application application_1445072191101_0026

15/10/17 12:09:06 INFO Job: The url to track the job: http://master.phd.net:8088/proxy/application_1445072191101_0026/

15/10/17 12:09:06 INFO Job: Running job: job_1445072191101_0026

15/10/17 12:09:14 INFO Job: Job job_1445072191101_0026 running in uber mode : false

15/10/17 12:09:14 INFO Job: map 0% reduce 0%

The jobs web console showed the job with State = ACCEPTED, Final Status = UNDEFINED and Tracking UI = UNASSIGNED.
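The same fields can also be read without the browser, through the ResourceManager REST API. The snippet below is a minimal sketch, assuming the ResourceManager answers on the host and port shown in the tracking URL above; the helper names `summarize_app` and `fetch_app` are made up for illustration.

```python
import json
from urllib.request import urlopen


def summarize_app(payload: dict) -> dict:
    """Extract the fields the web console displays from an RM REST response."""
    app = payload["app"]
    return {
        "state": app.get("state"),
        "finalStatus": app.get("finalStatus"),
        "trackingUI": app.get("trackingUI"),
        "diagnostics": app.get("diagnostics", ""),
    }


def fetch_app(rm_url: str, app_id: str, timeout: float = 10.0) -> dict:
    """Query /ws/v1/cluster/apps/<app_id> on the ResourceManager (assumed reachable)."""
    with urlopen(f"{rm_url}/ws/v1/cluster/apps/{app_id}", timeout=timeout) as resp:
        return summarize_app(json.load(resp))
```

For the job above, the call would be `fetch_app("http://master.phd.net:8088", "application_1445072191101_0026")`. An application that stays in ACCEPTED has been queued by the scheduler but has not yet been given a container for its ApplicationMaster, which is a hint to look at available resources rather than at the job itself.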

The first thing I suspected was a warning printed by the hadoop binary:

WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

It had absolutely nothing to do with my problem: I rebuilt the native library from source, but the job still hung.

I reviewed the namenode logs: nothing special. Then the various YARN logs (resourcemanager, nodemanager): no problems. The slaves' logs: same thing.

Since the Hadoop logs didn’t give much information, I searched the net for similar problems. It seems that this is a common problem related to memory configuration. I wonder why such problems are still not logged (Hadoop 2.6). I also analyzed the memory consumption using JConsole, but nothing looked alarming.

All the machines are CentOS 6.5 virtual machines hosted on an i7-G5 laptop with 16 GB of RAM. After connecting and configuring the three machines, I realized that the allocated disk space (15 GB for the namenode, 6 GB for the slaves) was not enough. Checking the disk usage (df -h) showed that only 3% of the disk space was available on the two slaves. This could be an issue, but Hadoop reports such errors, and none appeared in the logs.
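The disk check can be scripted so it is easy to repeat on each slave. This is a minimal sketch using only the Python standard library; the 90% limit is an assumption, chosen because it is, as far as I know, the default of the NodeManager disk health checker (`yarn.nodemanager.disk-health-checker.max-disk-utilization-per-disk-percentage`) in Hadoop 2.x, and the function names are hypothetical.

```python
import shutil


def used_percent(path: str = "/") -> float:
    """Return the percentage of the filesystem holding `path` that is in use."""
    usage = shutil.disk_usage(path)
    return 100.0 * usage.used / usage.total


def over_limit(path: str = "/", max_used_percent: float = 90.0) -> bool:
    """True when disk usage exceeds the limit (90% is an assumed default)."""
    return used_percent(path) > max_used_percent
```

The figure can differ slightly from the Use% column of `df -h`, which accounts for reserved blocks differently. If a NodeManager's local or log directories sit on a disk above that limit, the node may be reported as unhealthy in the ResourceManager's node list, so that page is worth a look when disks are nearly full.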

Looking in yarn-site.xml, I remembered that I had given YARN about 2 GB to run these test jobs:

<property>
  <name>yarn.nodemanager.resource.memory-mb</name>
  <value>2024</value>
</property>
<property>
  <name>yarn.scheduler.minimum-allocation-mb</name>
  <value>512</value>
</property>

I tried doubling this value on the namenode:

<property>
  <name>yarn.nodemanager.resource.memory-mb</name>
  <value>4096</value>
</property>
<property>
  <name>yarn.scheduler.minimum-allocation-mb</name>
  <value>512</value>
</property>
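To see why the extra memory can matter, here is a rough per-node calculation. It is a sketch under stated assumptions: the ApplicationMaster and task sizes are what I understand to be the Hadoop 2.x defaults in mapred-default.xml (1536 MB and 1024 MB), and requests are assumed to be rounded up to a multiple of `yarn.scheduler.minimum-allocation-mb`, as the default scheduler setup does. The function names are hypothetical.

```python
import math

# Assumed Hadoop 2.x defaults from mapred-default.xml; adjust them if
# mapred-site.xml on your cluster sets different values.
AM_MB = 1536    # yarn.app.mapreduce.am.resource.mb
TASK_MB = 1024  # mapreduce.map.memory.mb / mapreduce.reduce.memory.mb


def normalize(request_mb: int, min_alloc_mb: int) -> int:
    """Round a request up to the next multiple of the minimum allocation."""
    return max(1, math.ceil(request_mb / min_alloc_mb)) * min_alloc_mb


def node_headroom(node_mb: int, min_alloc_mb: int) -> dict:
    """Estimate what fits on ONE NodeManager that also hosts the AM."""
    am = normalize(AM_MB, min_alloc_mb)
    task = normalize(TASK_MB, min_alloc_mb)
    left = node_mb - am
    return {
        "am_fits": left >= 0,
        "left_after_am_mb": max(left, 0),
        "task_containers": max(left, 0) // task,
    }
```

With the values from the two configurations, `node_headroom(2024, 512)` leaves 488 MB after the ApplicationMaster, which is not enough for a single 1024 MB task on that node, while `node_headroom(4096, 512)` leaves room for two. This is only per-node arithmetic under the assumed defaults; it does not by itself prove what blocked the job on this cluster.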

Then I propagated the changes to the slaves, restarted everything (stop-dfs.sh && stop-yarn.sh && start-yarn.sh && start-dfs.sh), and ran the job again:

./cluster-reuters.sh

It works, finally 🙂

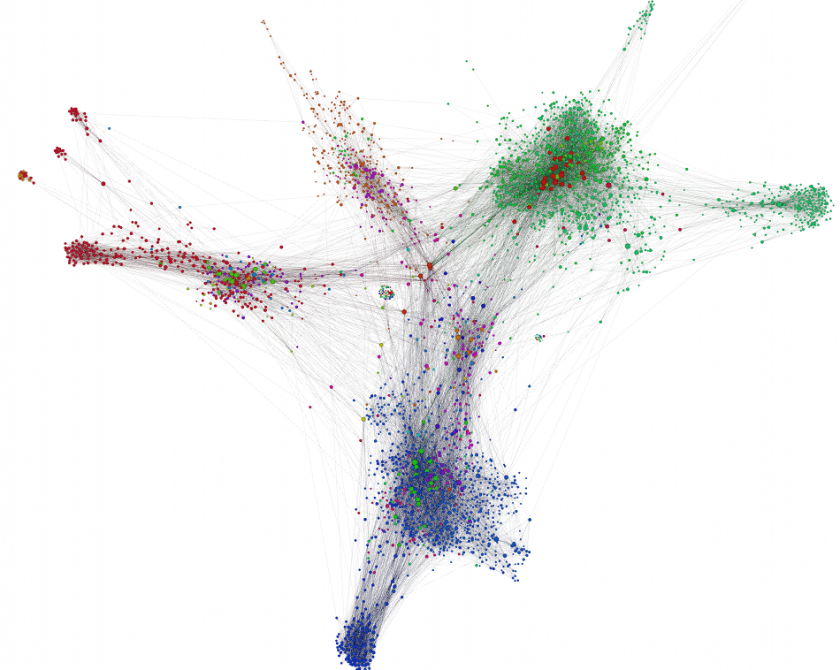

Now I’m trying to visualize the clustering results using Gephi.